I saw this tweet going viral:

How are rising mathematicians and physicists coping with the imminent advancements in AI? I have personally met folks who are working on integrating LLMs with proof verification languages (such as Lean) that project under a 2 year time horizon before LLMs can generate proofs and…

— sarah (@Saraht0n1n) March 4, 2025

A day does not go by without breathless proclamations about how AI will render millions of people unemployed and even make entire industries obsolete. Whether it’s healthcare, law, math, writing, art, or science, AI is coming for it all. A month ago, Bill Gates predicted that within 10 years “…AI will replace many doctors and teachers—humans won’t be needed ‘for most things’”.

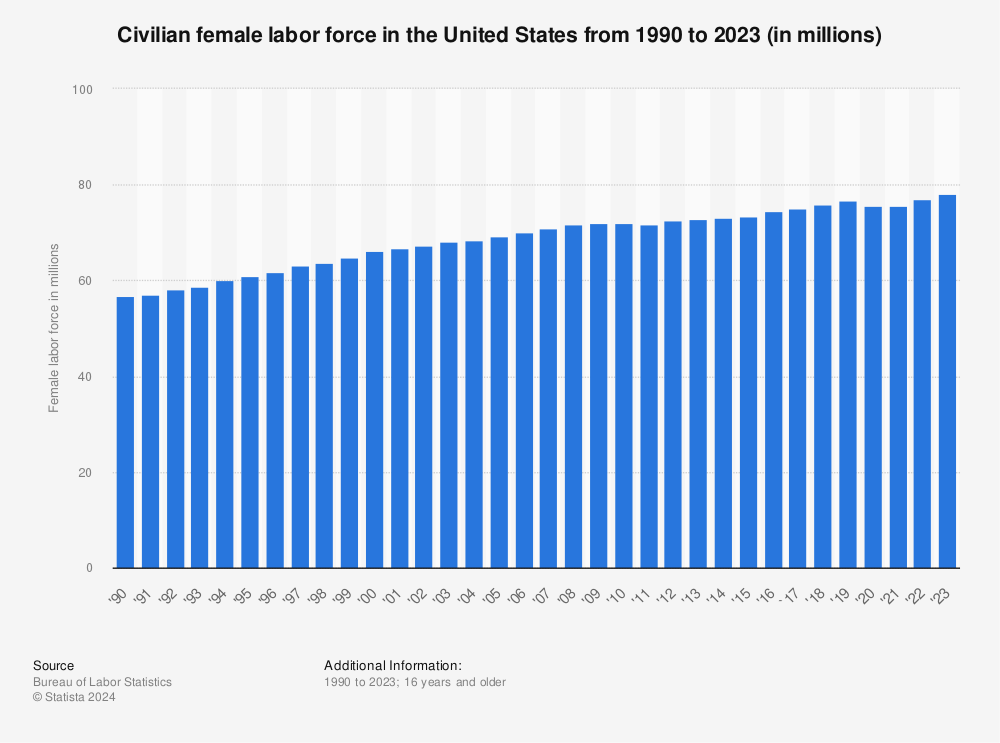

My take is that nothing close to those dire scenarios will happen. There will be better models and incremental advances, but no “mass unemployment”. As for AI destroying jobs, jobs are always being created and destroyed; that churn is part of the normal functioning of a healthy economy. Indeed, the size of the US labor force has not fallen even as AI has become more powerful in recent years.

Only Covid caused a dip, and a temporary one at that. This is not to say mass unemployment cannot happen, but I think it’s time to give the doomsaying a rest, as the evidence doesn’t support it.

People tend to underestimate the resilience of many jobs. Even jobs that one would assume are the most vulnerable to large language models, such as anything involving writing, have held up well and are even thriving.

On Substack, for example, my own observation is that the top writers are still seeing record revenue and engagement, such as likes and comments. (Because Substack’s internal traffic metrics are private, the only way to gauge traffic to a particular author is through visible engagement metrics.) Three years on, GPT-4, Claude, Gemini, and the like have not put a dent in this. One would naively assume that language models would be an acute threat to this business model, but evidently not.

In 2024, the 27 highest-paid authors on Substack earned a collective $22 million. That represents an arbitrage-like opportunity: use language models to siphon off some of this revenue at scale by generating blog posts from text prompts. Yet as far as I know, it hasn’t happened. Language models cannot copy the subtle nuances of top writers’ styles, let alone their branding or audience. Success at writing is much more than just producing decent prose.

What about coders, doctors, and lawyers? Surely AI will make them obsolete, according to the experts who have insisted for the past three years that this would happen ‘any day now’. As it turns out, these jobs are also surprisingly resilient to automation. Lawyers still have to represent clients in court and deal with the other intricacies of law. Even if AI can parse legal documents at scale, an expert is still needed to verify that it was done correctly, or to distill the results.

When people talk about AI automating doctors, they seem to mean triage, such as collecting symptoms and mapping them to a diagnosis, but this is closer to a nurse’s role. A doctor does much more. Doctors run clinical trials, operate on patients, prescribe drugs based on individual patient needs, and perform invasive diagnostics such as colonoscopies and biopsies, which cannot be automated.

Rather than becoming obsolete, coders will be repurposed, for example to edit and debug AI-generated code or to program the AI systems themselves. According to coders on Reddit, app development is still difficult despite AI. AI is a tool; it cannot actually create the product or deal with the nuances of development. Unlike a static webpage, an app has many moving parts, and it has to be responsive. That is why coders are still paid a lot: to fill the gaps that AI cannot.

Much of this doomsaying, I suspect, is motivated by a desire for equality: the hope that AI levels the playing field or otherwise makes society more equal. However, there is no evidence that either is happening, nor much compelling evidence that AI will bring about an “end of everything”.

Nor can I find any evidence of smarter people losing relative status; again, the opposite seems to be true. Top music acts continue to draw record revenue through touring and downloads. The same goes for actors, athletes, and writers: the biggest names continue to command record-sized paychecks and popularity.

If anything, people who are already successful and wealthy, and who have connections and sought-after skills, will be better able to adapt to AI than low-skilled workers or those who lack cultural capital, who will be among the first on the chopping block if the predicted mass unemployment happens.

Someone who is smart enough to earn a STEM degree can adapt to AI by learning a new skill, since IQ and skill acquisition are correlated, whereas someone who is not as smart will struggle to learn new skills. This is why the “learn to code” initiative was rightly ridiculed; middle-aged guys laid off from factories cannot just suddenly make the leap to the abstractions of code. Even experts who code for a living find it hard.

The fact that FAMNG+ and quant/finance wages are at all-time highs despite 3+ years of language models is evidence against such obsolescence or leveling of the playing field. Although low-skilled jobs can be hard to automate with robots, they are still vulnerable to other macro forces, such as immigration.

AI does many amazing things and can make individuals more productive. I use ChatGPT myself, for example to generate LaTeX code for Overleaf and to tighten up my writing, but I cannot envision it actually taking over someone’s job, except perhaps some ghostwriting, which is tiny relative to the overall US economy. I think it’s time to give the doom and gloom a rest until the evidence becomes clearer.